November update: Fun with Docker

Posted by David Zaslavsky

In honor of November, which used to be my NaBloWriMo (“National” Blog Writing Month), I’m determined to exceed my usual output of zero posts per month.

A haphazard introduction to Docker

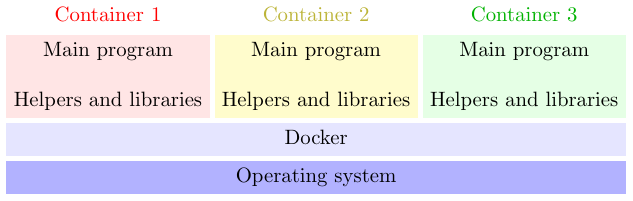

My latest hobby project has been changing this website to use Docker. For anyone who doesn’t know, Docker is a containerization system, which means that it lets you run programs in their own individual “containers” that prevent them from interacting with other programs running on the same computer (except when you specifically allow it). For example, a file stored in a container is typically accessible only within that container, each container has its own set of network ports to send and receive communication, and so on. Containers are a lot like virtual machines, but whereas a virtual machine runs an entire operating system — that includes a kernel plus any number of programs — Docker runs only one primary program in each isolated environment, and when that program needs a kernel to do something like reading data from a file, it uses the underlying host system’s kernel. So you might think of containers as “partly-virtual machines”.

The isolated environment of a container works the other way around, too: files and ports and so on that are available on the host system are invisible inside the container (again, unless you configure it otherwise). This means that all the helper programs, data files, and everything else a container needs, other than the operating system kernel itself, have to be made available inside the container. Docker has a handy way of doing this using images, which are like archives that contain all the files that you want to exist in a container when it first starts up. And this leads to one of the key advantages of using Docker or a similar system based on containers and images: the images are self-contained and fully reusable, so they don’t care what underlying host server you run them on. If you have to, say, change from an old computer to a new one, there’s no need to spend a lot of time figuring out which configuration settings you need to copy from the old computer and which programs you need to install to get the new one to work.

What I’ve done with this website is replace the old standalone Caddy executable with a Caddy Docker container made from an image I downloaded from Docker Hub, the old MariaDB database server with a MariaDB Docker container also made from an image from Docker Hub, and the Textwriter and Modulo services which power parts of the site’s backend with containers made from custom images of that software. All of their configurations are listed in a docker-compose file. This way, whatever happens to the underlying server, I can just copy the docker-compose file and a few data files (the SQL database and the site content) to the new server and everything is ready to go with one command. Easier said than done, of course, but it’s also much easier done than it used to be under the old system where I’d have to go poking around the whole system for relevant configuration files. (I’m still not sure I got them all.)
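
For a sense of what such a file might look like, here is a minimal sketch, not the actual configuration behind this site. The service names, image tags, ports, host paths, and placeholder password are all assumptions made for illustration, and the custom Textwriter and Modulo services are left out. The script only writes the file to a scratch directory; it doesn’t start anything.

```shell
# Write a minimal, hypothetical docker-compose.yml into a scratch directory.
# Service names, image tags, ports, and host paths are illustrative assumptions.
workdir="$(mktemp -d)"
cat > "$workdir/docker-compose.yml" <<'EOF'
services:
  caddy:
    image: caddy:2                      # a Caddy image pulled from Docker Hub
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile:ro
      - ./site:/srv:ro                  # site content bind-mounted from the host
  db:
    image: mariadb:10                   # a MariaDB image pulled from Docker Hub
    environment:
      MARIADB_ROOT_PASSWORD: change-me  # placeholder, not a real secret
    volumes:
      - ./dbdata:/var/lib/mysql         # database files kept on the host
EOF

# On a machine with Docker installed, the "one command" would then be:
#   cd "$workdir" && docker compose up -d
echo "compose file written to $workdir/docker-compose.yml"
```

With the older standalone tool, the equivalent command would be `docker-compose up -d`. Either way, the compose file plus the bind-mounted directories are the things to carry over to a new server.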

Container lessons

In the process I’ve learned a few things about how to replace my traditional programs, which I would normally run on a plain old Linux system, with equivalent programs that run in Docker containers.

- I believe this is what people mean by “dockerizing”. Certain people love their buzzwords....

- Programs and configuration files which are relatively unchanging go in containers; data files which need frequent updates from outside the container are better left out. If you put your data files in a container, then every time you want to update the data, as far as I can tell, you more or less have to update the image that the container is based on, stop the container, and then restart it with the newer image, which is a pain to do and also means extra downtime every time you do an update. On the other hand, bind-mounting a data directory (from the host system) into the container means you can update the data with any normal method like rsync, plus you don’t have to use up hard drive space saving every version of the data files. I learned this the hard way by trying to build my website content into an image, but eventually I settled on just rsyncing files like I used to do.

- docker-compose files are great for keeping a record of both the image and the settings used to create a container. This is in addition to their intended purpose of configuring a set of containers that are designed to work together (which I think is called “container orchestration”).

- Containers are often used to run servers, but they’re also handy for setting up a compiler or data processor in a completely reproducible environment. You can bind-mount the source and destination directories from the host system, but everything else, including the configuration, is in the container.
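
The bind-mount pattern from the last bullet can be sketched as a one-off `docker run` command. This is only an illustration: the image name `example/site-builder` and the `src` and `out` directories are hypothetical, and the script prints the command rather than running it, so it works even without Docker installed.

```shell
# Dry-run sketch: build a `docker run` command for a one-off build job.
# The image name and the src/out directory names are hypothetical.
src_dir="$PWD/src"
out_dir="$PWD/out"

# --rm removes the container when the job exits; each -v flag bind-mounts a
# host directory into the container (":ro" makes the source read-only).
set -- docker run --rm \
  -v "$src_dir:/src:ro" \
  -v "$out_dir:/out" \
  example/site-builder:latest /src /out

# Print the command instead of running it. On a machine where Docker and the
# image are available, running "$@" directly would start the job.
build_cmd="$*"
echo "$build_cmd"
```

The source is mounted read-only so the job can’t modify it, and the output lands in an ordinary host directory where tools like rsync can pick it up.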

I bet that container apps are only going to get more popular in the near future, so I plan to be tinkering with this technology a lot more.

Other notes

Now that I’ve gotten the hang of updating my website with Docker, I’m hoping to start making occasional posts again. I’ve got some ideas lined up, including my departure from Physics Stack Exchange, my perspective on doing technical interviews, and a series of Christmas music recommendations which I might be able to run this year if I can get things set up quickly enough.